There is an interesting quote from the British statistician George Box: “All models are wrong, but some are useful.” He was spot on, and this applies not only to statistical models but to all kinds of scientific models. They are all approximations to begin with, i.e. they cannot and will not represent the full complexity of whatever object, event, system, etc. is being modeled.

They are, however, useful to a certain extent and can still capture certain aspects of that reality. In machine learning, anybody who has built a model knows that similar data and similar models can lead to different results, each representing different aspects of the problem at hand and possibly reflecting distinct underlying elements of it.

Besides being only approximations, models are also vulnerable. Most machine learning models, for instance, have been shown to be more or less easily manipulated into failing or behaving differently. Intuitively we can understand why: they were not designed to produce ‘good’ outputs for every possible input, and no model could be. This is why there is still, and possibly always will be, a gap in defending against adversarial attacks on machine learning.

Now, none of this is specific to cybersecurity; it applies to any area of science where machine learning is used. Inherent model limitations, adversarial threats, data quality, sample complexity, etc. are challenges found in every domain. What sets cybersecurity apart, though, is that its input data can be manipulated or replaced, notably by skilled adversaries. Customer data used in marketing, for instance, is much more reliable than telemetry collected from running software (most customers are not actively trying to hide their activity or make the marketer believe they are doing something else!).

How useful?

Most of the available evidence on how useful these models are is anecdotal. There is no data on overall detection attribution (which share of detections actually came from machine learning), only reports of machine learning models detecting this or that attack or campaign, and sometimes their initial evaluation metrics, e.g. prediction accuracy, mostly in academic papers. Those metrics say little about the models’ actual behavior and performance in the wild. Even when testing vendors report a ‘behavioral block’ (as opposed to a ‘signature block’, for instance), it does not mean a machine learning model was responsible; it could just as well have been an expert-written heuristic or rule.
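To make the point about accuracy concrete, here is a small Python sketch with entirely hypothetical counts: one million benign files and 100 malicious ones, plus an assumed 1% false positive rate for the second model. None of these numbers are measurements. Because malicious samples are so rare, a ‘model’ that never alerts scores higher accuracy than one that catches 90% of the malicious files, which is one reason a headline accuracy figure says little about detection in the wild.

```python
# Hypothetical illustration: accuracy vs. precision/recall under heavy class imbalance.
# All counts below are made up for the example; they are not real measurements.

def metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute accuracy, precision and recall from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}

BENIGN = 1_000_000  # hypothetical number of benign files
MALICIOUS = 100     # hypothetical number of malicious files

# A "model" that never raises an alert: every malicious file is a false negative.
silent = metrics(tp=0, fp=0, tn=BENIGN, fn=MALICIOUS)

# A model assumed to catch 90% of malicious files while flagging 1% of benign ones.
tp = 90
fp = BENIGN // 100
noisy = metrics(tp=tp, fp=fp, tn=BENIGN - fp, fn=MALICIOUS - tp)

for name, m in (("silent", silent), ("noisy", noisy)):
    print(f"{name}: accuracy={m['accuracy']:.4f} "
          f"precision={m['precision']:.4f} recall={m['recall']:.2f}")
```

The silent model reports about 99.99% accuracy with zero recall, while the model that actually detects something reports about 99.0% accuracy, 90% recall and under 1% precision, so most of its alerts would still need human triage.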

Having personally worked on the design and implementation of machine learning models for Symantec’s ATP, I can confirm that triaging detection data is not trivial. Investigating detections and potential attacks takes expertise, time, and a fair amount of manual work. In practice, it is also hard to establish how the models really perform in the wild, beyond minimizing known (and clear-cut) false positives (FPs) or improving their detection rate relative to some baseline, e.g. the rest of your detection stack. We also have no idea what we missed (false negatives, FNs). Add to that the constantly evolving threat landscape, and models can become obsolete within a few weeks.

We have been saying for years that machine learning can enhance defenses and ‘learn’ to generalize automatically, detecting either similar or unusual patterns, but we have never really measured it. For instance, what proportion of previously unseen variants of known threats do machine learning models pick up? What proportion, or number, of entirely new threats unrelated to any known one (zero-days) were discovered by machine learning models? We have no clear answer.
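Answering these questions would require something like a set of confirmed incidents, each labeled as either an unseen variant of a known threat or a zero-day, together with which parts of the detection stack fired on it. The Python sketch below assumes exactly such a triaged dataset; the `Incident` record, its `category` labels and the `"ml"` source tag are hypothetical, and the sample incidents are invented. It computes, per category, the share of incidents that machine learning detected at all and the share it alone detected. It can only count misses that were later confirmed by other means; threats nobody ever found remain invisible, which is the FN problem described above.

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class Incident:
    """A confirmed, triaged incident (hypothetical record format)."""
    incident_id: str
    category: str  # hypothetical labels: "variant" or "zero-day"
    # Detection sources that fired, e.g. {"ml", "signature"}; an empty set means
    # the whole stack missed it and it was only confirmed later by other means.
    detected_by: set[str] = field(default_factory=set)

def ml_attribution(incidents: list[Incident]) -> dict[str, dict[str, float]]:
    """Per category: share of incidents detected by ML, and by ML alone."""
    counts = defaultdict(lambda: {"total": 0, "ml_any": 0, "ml_only": 0})
    for inc in incidents:
        c = counts[inc.category]
        c["total"] += 1
        if "ml" in inc.detected_by:
            c["ml_any"] += 1
            if inc.detected_by == {"ml"}:
                c["ml_only"] += 1
    return {
        cat: {
            "ml_detected": c["ml_any"] / c["total"],
            "ml_only": c["ml_only"] / c["total"],
        }
        for cat, c in counts.items()
    }

# Invented example data, for illustration only.
incidents = [
    Incident("a", "variant", {"ml", "signature"}),
    Incident("b", "variant", {"signature"}),
    Incident("c", "zero-day", {"ml"}),
    Incident("d", "zero-day", set()),  # missed by the stack, reported externally
]
result = ml_attribution(incidents)
print(result)
```

The ‘ML only’ share is the more telling number here: a detection that a signature or rule would have caught anyway says little about machine learning’s added value.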

A recent Project Zero blog post describes the detection deficit for zero-days exploited in the wild and provides some statistics that may give an indication of how these models perform. The most worrying sign for me was the absence of the vendors best positioned to detect those threats (security vendors, or the vendors whose products were targeted by these zero-days), given their access to the data and all the supposedly ‘advanced’ machine learning models they have deployed. Among security vendors, only Kaspersky made the list, with 4 zero-days discovered last year (out of 20).

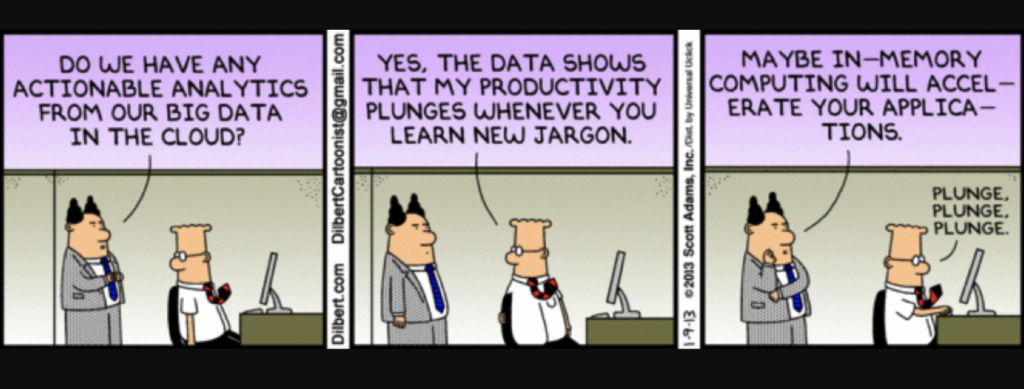

I believe this is partly a resource issue, i.e. vendors are not putting enough resources into investigating the hits and performance of their models in the wild. But the reality is that machine learning as a detection tool has been overestimated and still relies heavily on human experts. Machine learning on its own will not change the cybersecurity landscape without the right setup, including frequent and dedicated expert input, which is currently not the case. Vendors were looking for a new marketing tool and a few quick wins with machine learning, but there is still a long way to go before it makes a real difference in cybersecurity.